The SEO game has so many moving parts that it often seems like, as soon as we’re done optimizing one part of a website, we have to move back to the part we were just working on.

Once you’re out of the “I’m new here” stage and feel that you have some real SEO experience under your belt, you might start to feel that there are some things you can devote less time to correcting.

Indexability and crawl budgets could be two of those things, but forgetting about them would be a mistake.

I always like to say that a website with indexability issues is a site that’s in its own way; that website is inadvertently telling Google not to rank its pages because they don’t load correctly or they redirect too many times.

If you think you can’t or shouldn’t be devoting time to the decidedly not-so-glamorous task of fixing your site’s indexability, think again.

Indexability problems can cause your rankings to plummet and your site traffic to dry up quickly.

So, your crawl budget has to be top of mind.

In this post, I’ll present you with 11 tips to consider as you go about improving your website’s indexability.

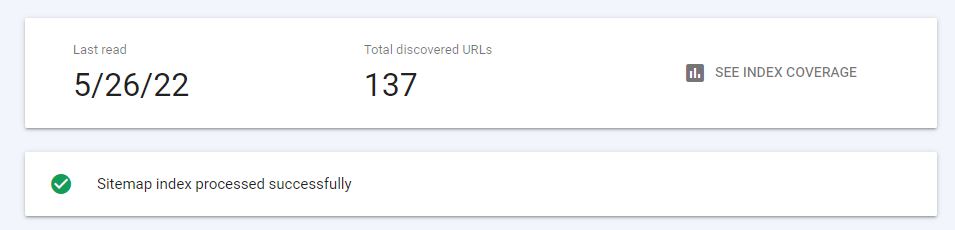

1. Track Crawl Status With Google Search Console

Errors in your crawl status could be indicative of a deeper issue on your site.

Checking your crawl status every 30-60 days is important to identify potential errors that are impacting your site’s overall marketing performance.

It’s literally the first step of SEO; without it, all other efforts are null.

Right there on the sidebar, you’ll be able to check your crawl status under the index tab.

Screenshot by author, May 2022

Now, if you want to remove access to a certain webpage, you can tell Search Console directly. This is useful if a page is temporarily redirected or has a 404 error.

A 410 status code will permanently remove a page from the index, so beware of using the nuclear option.

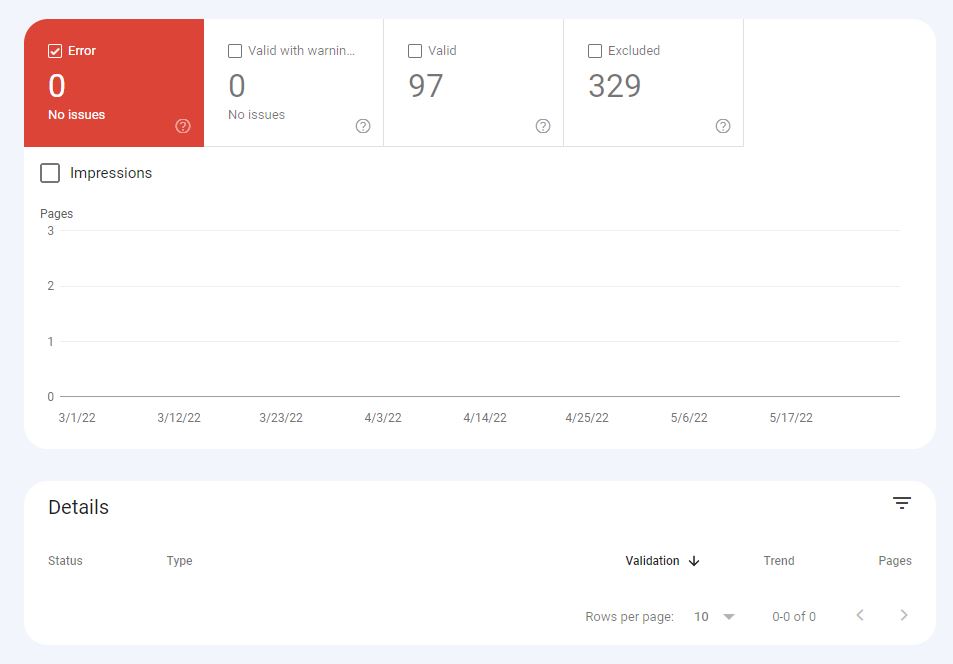

Common Crawl Errors & Solutions

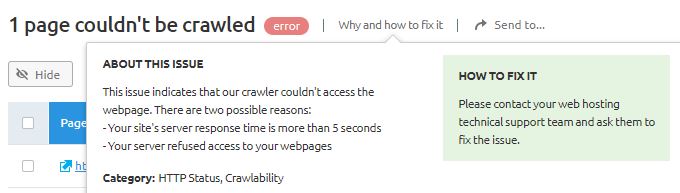

If your website is unfortunate enough to be experiencing a crawl error, it may require an easy solution or be indicative of a much larger technical problem on your site.

The most common crawl errors I see are:


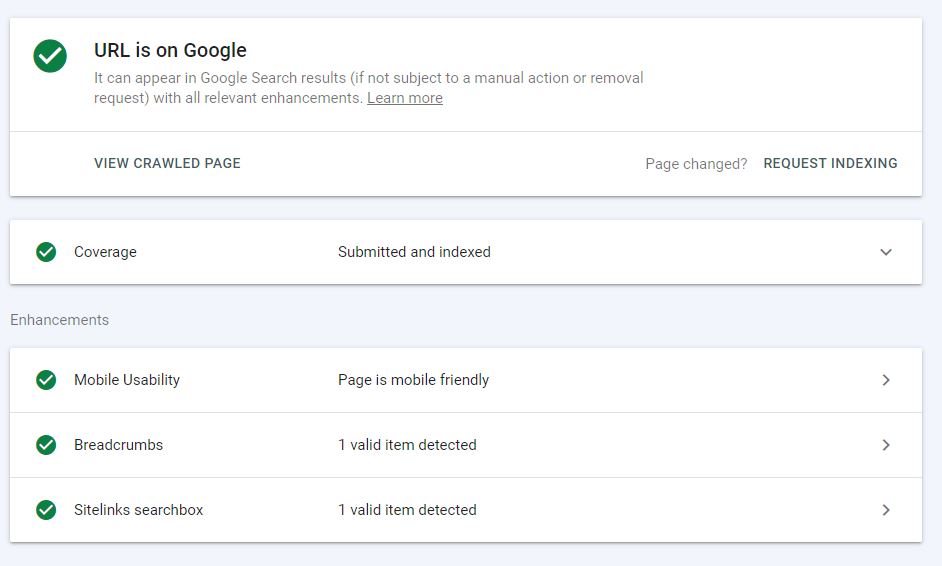

To diagnose some of these errors, you can leverage the URL Inspection tool to see how Google views your site.

Failure to properly fetch and render a page could be indicative of a deeper DNS error that will need to be resolved by your DNS provider.


Resolving a server error requires diagnosing a specific error. The most common errors include:

- Timeout.

- Connection refused.

- Connect failed.

- Connect timeout.

- No response.

Most of the time, a server error is temporary, although a persistent problem could require you to contact your hosting provider directly.

Robots.txt errors, on the other hand, could be more problematic for your site. If your robots.txt file is not returning a 200 or 404 status code, it means search engines are having difficulty retrieving this file.

You could submit a robots.txt sitemap or avoid the protocol altogether, opting to manually noindex pages that could be problematic for your crawl.

Resolving these errors quickly will ensure that all of your target pages are crawled and indexed the next time search engines crawl your site.
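
If you want to spot-check how your robots.txt file responds, here is a minimal Python sketch. The function names and the wording of the messages are my own assumptions for illustration, and the handling of each status is a rough rule of thumb, not a description of how any particular search engine behaves.

```python
import urllib.error
import urllib.request


def classify_robots_status(status_code: int) -> str:
    """Roughly classify the HTTP status returned for /robots.txt."""
    if status_code == 200:
        return "ok: robots.txt was found and can be read"
    if status_code == 404:
        # A missing file is typically treated as "no crawl restrictions".
        return "ok: no robots.txt present"
    if 500 <= status_code < 600:
        return "problem: server error while fetching robots.txt"
    return "check: unexpected status, inspect manually"


def fetch_robots_status(site_root: str) -> int:
    """Request <site_root>/robots.txt and return the HTTP status code."""
    url = site_root.rstrip("/") + "/robots.txt"
    try:
        with urllib.request.urlopen(url, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urllib raises for 4xx/5xx responses; the code is still useful.
        return err.code
```

Calling `classify_robots_status(fetch_robots_status("https://example.com"))` (a placeholder domain) would give a quick read on whether the file is retrievable.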

2. Create Mobile-Friendly Webpages

With the arrival of the mobile-first index, we must also optimize our pages to display mobile-friendly copies on the mobile index.

The good news is that a desktop copy will still be indexed and displayed under the mobile index if a mobile-friendly copy does not exist. The bad news is that your rankings may suffer as a result.

There are many technical tweaks that can instantly make your website more mobile-friendly, including:

- Implementing responsive web design.

- Inserting the viewport meta tag in content.

- Minifying on-page resources (CSS and JS).

- Tagging pages with the AMP cache.

- Optimizing and compressing images for faster load times.

- Reducing the size of on-page UI elements.

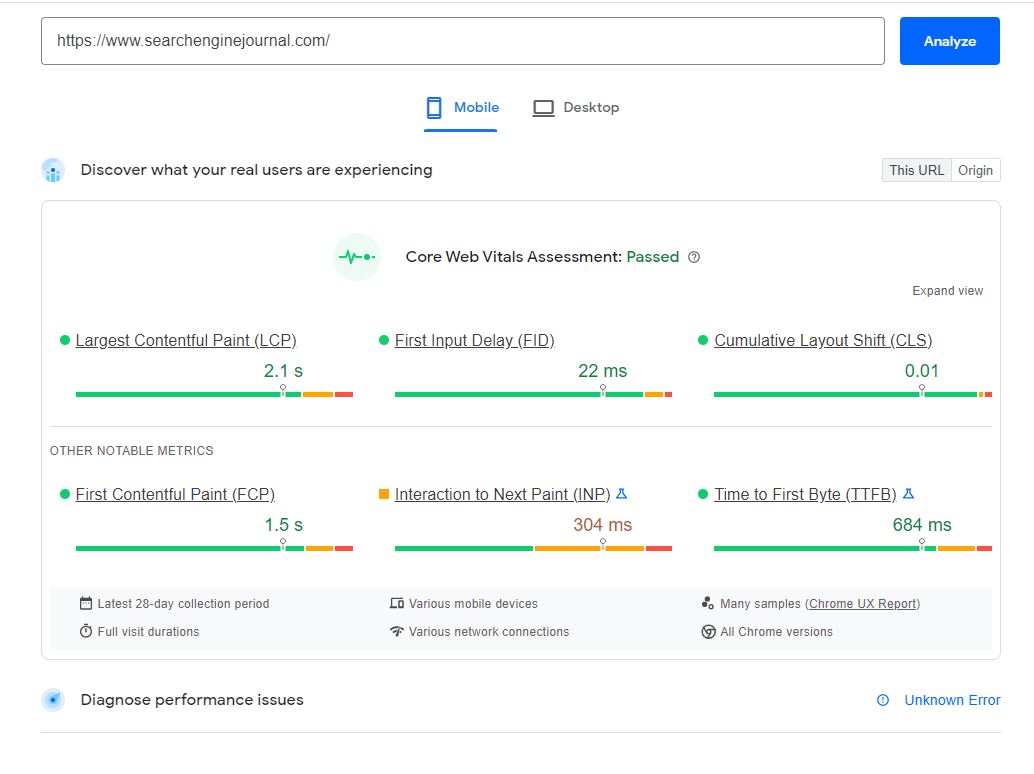

Be sure to test your website on a mobile platform and run it through Google PageSpeed Insights. Page speed is an important ranking factor and can affect the speed at which search engines can crawl your site.
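
One of the tweaks above, the viewport meta tag, is easy to check for automatically. The sketch below uses Python's standard `html.parser`; the class and function names are hypothetical, and it only looks for the tag's presence, not whether its value is sensible.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag seen."""

    def __init__(self):
        super().__init__()
        self.viewport_content = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.viewport_content is not None:
            return
        attr_map = dict(attrs)
        if (attr_map.get("name") or "").lower() == "viewport":
            self.viewport_content = attr_map.get("content")


def find_viewport(html: str):
    """Return the viewport meta content, or None if the tag is missing."""
    finder = ViewportFinder()
    finder.feed(html)
    return finder.viewport_content
```

A page that returns `None` here is a good candidate for a closer look in a mobile testing tool.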

3. Update Content Regularly

Search engines will crawl your site more regularly if you produce new content on a regular basis.

This is especially useful for publishers who need new stories published and indexed on a regular basis.

Producing content on a regular basis signals to search engines that your site is constantly improving and publishing new content, and therefore needs to be crawled more often to reach its intended audience.

4. Submit A Sitemap To Each Search Engine

One of the best tips for indexation to this day remains to submit a sitemap to Google Search Console and Bing Webmaster Tools.

You can create an XML version using a sitemap generator or manually create one in Google Search Console by tagging the canonical version of each page that contains duplicate content.

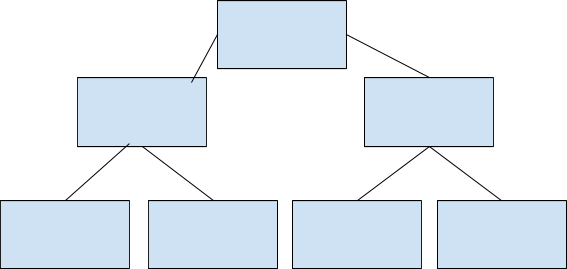
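
If you would rather script the XML version yourself, a bare-bones generator might look like the sketch below. It assumes you already have a list of canonical URLs (the example URLs are placeholders) and it only writes the required `loc` element for each entry, leaving out optional fields such as `lastmod`.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap string listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


sitemap_xml = build_sitemap([
    "https://example.com/",
    "https://example.com/services",
])
```

The resulting string can be saved as `sitemap.xml` and submitted through each search engine's webmaster tools.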

5. Optimize Your Interlinking Scheme

Establishing a consistent information architecture is crucial to ensuring that your website is not only properly indexed, but also properly organized.

Creating main service categories where related webpages can sit can further help search engines properly index webpage content under certain categories when the intent may not be clear.


6. Deep Link To Isolated Webpages

If a webpage on your site or a subdomain is created in isolation, or there’s an error preventing it from being crawled, you can get it indexed by acquiring a link on an external domain.

This is an especially useful strategy for promoting new pieces of content on your website and getting them indexed quicker.

Beware of syndicating content to accomplish this, as search engines may ignore syndicated pages, and it could create duplicate errors if not properly canonicalized.
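
To find isolated pages in the first place, you can walk your internal links from the homepage and see what is never reached. This is a rough sketch: the `links` mapping is a hypothetical stand-in for crawl data you would export from your own tooling.

```python
from collections import deque


def find_isolated_pages(links, start):
    """Return pages that cannot be reached by following links from start.

    links maps each page to the list of internal pages it links to.
    """
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return sorted(all_pages - seen)


site_links = {
    "/": ["/services", "/blog"],
    "/services": ["/"],
    "/blog": [],
    "/old-landing": ["/"],  # links out, but nothing links to it
}
isolated = find_isolated_pages(site_links, "/")
```

Any page in the result is a candidate for an internal link, or for the external deep link described above.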

7. Minify On-Page Resources & Increase Load Times

Forcing search engines to crawl large and unoptimized images will eat up your crawl budget and prevent your site from being indexed as often.

Search engines also have difficulty crawling certain backend elements of your website. For example, Google has historically struggled to crawl JavaScript.

Even certain resources like Flash and CSS can perform poorly over mobile devices and eat up your crawl budget.

In a sense, it’s a lose-lose scenario where page speed and crawl budget are sacrificed for obtrusive on-page elements.

Be sure to optimize your webpage for speed, especially over mobile, by minifying on-page resources, such as CSS. You can also enable caching and compression to help spiders crawl your site faster.
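
To get a feel for what compression can save, the sketch below gzips a chunk of repetitive CSS and reports the compressed size as a fraction of the original. The sample stylesheet is made up, and real savings depend on your actual files and server settings.

```python
import gzip


def compression_ratio(text: str) -> float:
    """Return compressed size divided by original size for the given text."""
    raw = text.encode("utf-8")
    compressed = gzip.compress(raw)
    return len(compressed) / len(raw)


# A deliberately repetitive, made-up stylesheet.
sample_css = ".card { margin: 0 auto; padding: 16px; }\n" * 200
ratio = compression_ratio(sample_css)
```

A lower ratio means more bytes saved on every request, whether that request comes from a visitor or a crawler.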


8. Fix Pages With Noindex Tags

Over the course of your website’s development, it may make sense to implement a noindex tag on pages that may be duplicated or only meant for users who take a certain action.

Regardless, you can identify webpages with noindex tags that are preventing them from being indexed by using a free online tool like Screaming Frog.

The Yoast plugin for WordPress allows you to easily switch a page from index to noindex. You could also do this manually in the backend of pages on your site.

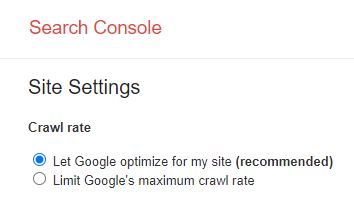
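
If you prefer to script the check, the following sketch scans a page's HTML for a robots meta tag that contains `noindex`. The names are my own, and it only covers meta tags; it does not look at the `X-Robots-Tag` HTTP header, which can carry the same directive.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and googlebot tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = dict(attrs)
        if (attr_map.get("name") or "").lower() in {"robots", "googlebot"}:
            content = attr_map.get("content") or ""
            for part in content.split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the page carries a noindex meta directive."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives
```

Running this across a list of important URLs is a quick way to catch a noindex tag that was left on a page by accident.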

9. Set A Custom Crawl Rate

In the old version of Google Search Console, you can actually slow or customize the speed of your crawl rates if Google’s spiders are negatively impacting your site.

This also gives your website time to make necessary changes if it is going through a significant redesign or migration.


10. Eliminate Duplicate Content

Having massive amounts of duplicate content can significantly slow down your crawl rate and eat up your crawl budget.

You can eliminate these problems by either blocking these pages from being indexed or placing a canonical tag on the page you wish to be indexed.

Along the same lines, it pays to optimize the meta tags of each individual page to prevent search engines from mistaking similar pages as duplicate content in their crawl.
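
A simple way to surface exact duplicates is to hash each page's normalized text and group the URLs that share a hash. This sketch assumes you already have the text of each page in a dictionary; it only catches copies that match after lowercasing and collapsing whitespace, not near-duplicates.

```python
import hashlib
from collections import defaultdict


def group_duplicates(pages):
    """Group URLs whose text is identical after basic normalization.

    pages maps URL -> page text. Returns a list of URL groups.
    """
    groups = defaultdict(list)
    for url, text in pages.items():
        normalized = " ".join(text.lower().split())
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


duplicates = group_duplicates({
    "/shoes": "Red  running shoes",
    "/shoes?ref=mail": "red running shoes",
    "/hats": "Blue hats",
})
```

Each group is a set of pages where one URL should probably be chosen as the canonical version.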

11. Block Pages You Don’t Want Spiders To Crawl

There may be instances where you want to prevent search engines from crawling a specific page. You can accomplish this by the following methods:

- Placing a noindex tag.

- Placing the URL in a robots.txt file.

- Deleting the page altogether.

This can also help your crawls run more efficiently, instead of forcing search engines to pour through duplicate content.
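
Before relying on a robots.txt rule, it helps to confirm that it blocks what you expect. The sketch below uses Python's standard `urllib.robotparser`; the rules and URLs are placeholders. Keep in mind that robots.txt controls crawling, while a noindex tag controls indexing, so the two are not interchangeable.

```python
from urllib.robotparser import RobotFileParser


def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Check whether the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Placeholder rules: block everything under /private/ for all crawlers.
rules = "User-agent: *\nDisallow: /private/\n"

blocked = is_crawl_allowed(rules, "https://example.com/private/report.html")
allowed = is_crawl_allowed(rules, "https://example.com/blog/post")
```

Here `blocked` should come back `False` and `allowed` should come back `True`, which is a quick sanity check before you publish a new rule.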

Conclusion

The state of your website’s crawlability problems will more or less depend on how much you’ve been staying current with your own SEO.

If you’re tinkering in the back end all the time, you may have identified these issues before they got out of hand and started affecting your rankings.

If you’re not sure, though, run a quick scan in Google Search Console to see how you’re doing.

The results can really be educational!


Featured Image: Ernie Janes/Shutterstock
