
Google provides the PageSpeed Insights API to help SEO professionals and developers by combining real-world data with simulation data, providing load performance timing data related to web pages.

The difference between Google PageSpeed Insights (PSI) and Lighthouse is that PSI involves both real-world and lab data, whereas Lighthouse performs a page loading simulation by modifying the connection and user-agent of the device.

Another point of difference is that PSI doesn’t supply any information related to web accessibility, SEO, or progressive web apps (PWAs), whereas Lighthouse provides all of the above.

Thus, when we use the PageSpeed Insights API for bulk URL loading performance tests, we won’t have any data for accessibility.

However, PSI provides more information related to page speed performance, such as “DOM Size,” “Deepest DOM Child Element,” “Total Task Count,” and “DOM Content Loaded” timing.

One more advantage of the PageSpeed Insights API is that it gives the “observed metrics” and “actual metrics” different names.

In this guide, you will learn:

- How to create a production-level Python script.

- How to use APIs with Python.

- How to construct data frames from API responses.

- How to analyze the API responses.

- How to parse URLs and process URL requests’ responses.

- How to store the API responses with proper structure.

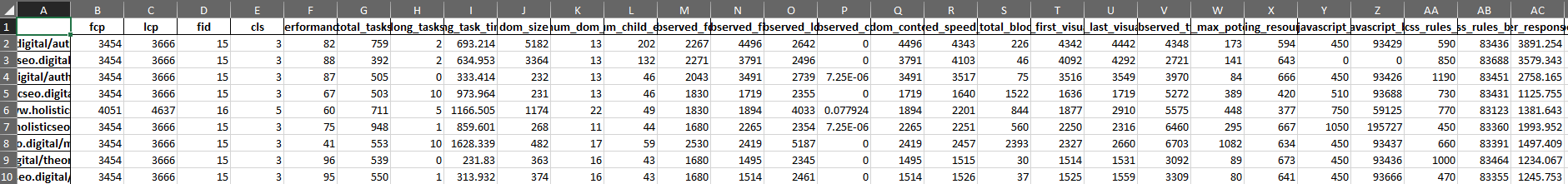

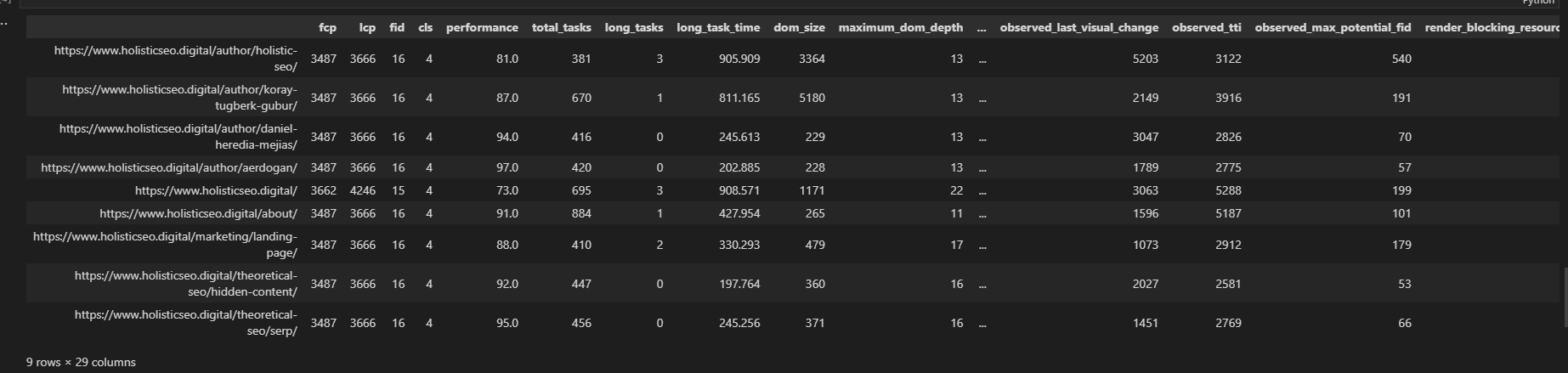

An example output of the Page Speed Insights API call with Python is below.

Screenshot from author, June 2022

Libraries For Using PageSpeed Insights API With Python

The required libraries to use the PSI API with Python are below.

- Advertools retrieves testing URLs from the sitemap of a website.

- Pandas is to construct the data frame and flatten the JSON output of the API.

- Requests is to make a request to the specific API endpoint.

- JSON is to take the API response and put it into the specifically related dictionary point.

- Datetime is to change the specific output file’s name with the date of the moment.

- URLlib is to parse the test subject website URL.

How To Use PSI API With Python?

To use the PSI API with Python, follow the steps below.

- Get a PageSpeed Insights API key.

- Import the necessary libraries.

- Parse the URL for the test subject website.

- Take the date of the moment for the file name.

- Take URLs into a list from a sitemap.

- Choose the metrics that you want from the PSI API.

- Create a for loop for taking the API response for all URLs.

- Construct the data frame with the chosen PSI API metrics.

- Output the results in the form of XLSX.

1. Get PageSpeed Insights API Key

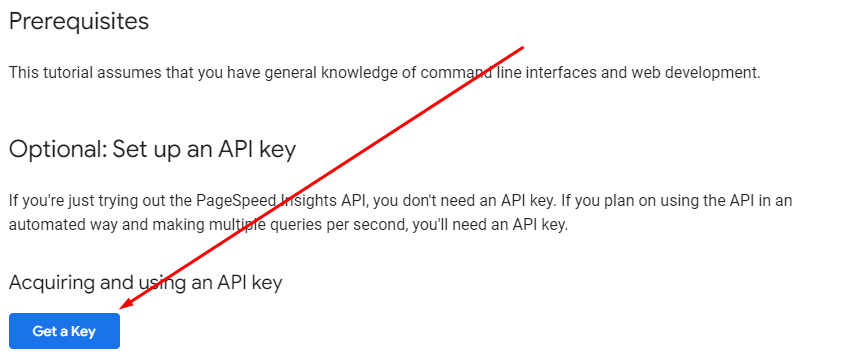

Use the PageSpeed Insights API Documentation to get the API Key.

Click the “Get a Key” button below.

Image from developers.google.com, June 2022

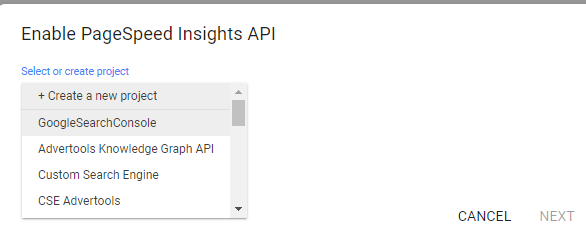

Choose a project that you have created in Google Developer Console.

Image from developers.google.com, June 2022

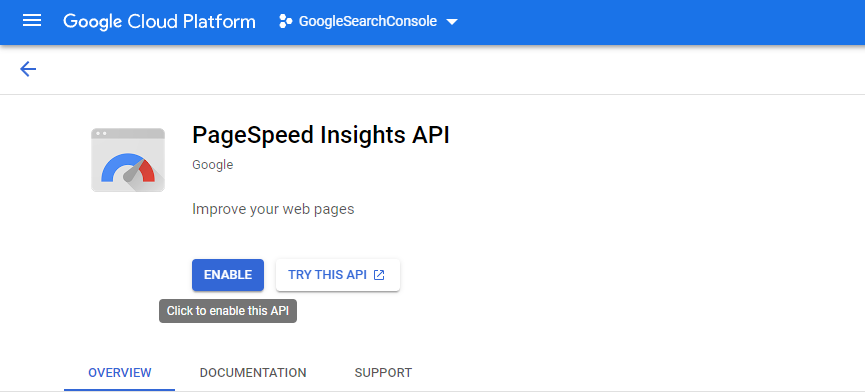

Enable the PageSpeed Insights API on that specific project.

Image from developers.google.com, June 2022

You will need to use the specific API Key in your API Requests.
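One common way to keep the key out of the script itself is to read it from an environment variable. The sketch below assumes a variable named `PSI_API_KEY`; that name and the `load_api_key` helper are hypothetical choices for illustration, not something the API requires.

```python
import os


def load_api_key(env_var: str = "PSI_API_KEY") -> str:
    """Return the API key stored in an environment variable.

    The variable name is an assumption for this sketch; any name works.
    """
    api_key = os.environ.get(env_var, "").strip()
    if not api_key:
        raise RuntimeError(f"Set the {env_var} environment variable first.")
    return api_key
```

With this in place, the rest of the script could call `api_key = load_api_key()` instead of hard-coding the key.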

2. Import The Necessary Libraries

Use the lines below to import the fundamental libraries.

import advertools as adv

import pandas as pd

import requests

import json

from datetime import datetime

from urllib.parse import urlparse

3. Parse The URL For The Test Subject Website

To parse the URL of the subject website, use the code structure below.

domain = urlparse(sitemap_url)

domain = domain.netloc.split(".")[1]

The “domain” variable is the parsed version of the sitemap URL.

The “netloc” represents the specific URL’s domain section. When we split it with the “.”, it takes the “middle section,” which represents the domain name.

Here, “0” is for “www,” “1” for “domain name,” and “2” is for “domain extension,” if we split it with “.” Note that this indexing assumes the host starts with “www.”
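Because the index-based split above depends on the “www.” prefix, a host such as `example.com` would yield `com` instead of the site name. The following is a minimal sketch of a more tolerant variant; the `site_name` helper is hypothetical, and it still naively assumes that the first label after an optional “www.” is the name you want.

```python
from urllib.parse import urlparse


def site_name(sitemap_url: str) -> str:
    """Return a short site label for file names, with or without "www."."""
    # hostname is lowercased and excludes any port; it is None for bad input.
    host = urlparse(sitemap_url).hostname or ""
    if host.startswith("www."):
        host = host[len("www."):]
    # Naive assumption: the first remaining label is the site name.
    return host.split(".")[0]


print(site_name("https://www.example.com/sitemap.xml"))
print(site_name("https://example.com/sitemap.xml"))
```

Both calls print `example`. Hosts with extra subdomains (for instance `blog.example.com`) would return `blog`, so adjust the rule if your sitemaps live on subdomains.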

4. Take The Date Of The Moment For File Name

To take the date of the specific function call moment, use the “datetime.now” method.

datetime.now gives the specific time of the specific moment. Use “strftime” with the “%Y”, “%m”, and “%d” values. “%Y” is for the year. “%m” and “%d” are numeric values for the specific month and day.

date = datetime.now().strftime("%Y_%m_%d")
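As an illustration of how the date string can end up in the output file name, here is a small sketch. The `output_file_name` helper and the `<domain>_<date>.xlsx` naming pattern are assumptions made for this example; the article does not prescribe a specific pattern.

```python
from datetime import datetime


def output_file_name(domain: str, now: datetime) -> str:
    """Build a dated file name from a site label and a point in time."""
    date = now.strftime("%Y_%m_%d")
    return f"{domain}_{date}.xlsx"


print(output_file_name("example", datetime(2022, 6, 15)))
# example_2022_06_15.xlsx
```

Passing the time in as an argument, rather than calling `datetime.now()` inside the helper, keeps the function easy to test.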

5. Take URLs Into A List From A Sitemap

To take the URLs into a list form from a sitemap file, use the code block below.

sitemap = adv.sitemap_to_df(sitemap_url)

sitemap_urls = sitemap["loc"].to_list()

If you read the Python Sitemap Health Audit, you can learn further information about sitemaps.

6. Choose The Metrics That You Want From PSI API

To choose the PSI API response JSON properties, you should see the JSON file itself.

It is highly relevant to the reading, parsing, and flattening of JSON objects.

It is even related to Semantic SEO, thanks to the concept of “directed graph,” and “JSON-LD” structured data.

In this article, we won’t focus on examining the specific PSI API Response’s JSON hierarchies.

You can see the metrics that I have chosen to gather from the PSI API. It is richer than the basic default output of the PSI API, which only gives the Core Web Vitals metrics, or Speed Index, Interaction to Next Paint, Time to First Byte, and First Contentful Paint.

Of course, it also gives “suggestions” by saying “Avoid Chaining Critical Requests,” but there is no need to put a sentence into a data frame.

In the future, these suggestions, or even every individual chain event and their KB and MS values, can be taken into a single column with the name “psi_suggestions.”

For a start, you can check the metrics that I have chosen; many of them may be new to you.

The first section of the PSI API metrics is below.

fid = []

lcp = []

cls_ = []

url = []

fcp = []

performance_score = []

total_tasks = []

total_tasks_time = []

long_tasks = []

dom_size = []

maximum_dom_depth = []

maximum_child_element = []

observed_fcp = []

observed_fid = []

observed_lcp = []

observed_cls = []

observed_fp = []

observed_fmp = []

observed_dom_content_loaded = []

observed_speed_index = []

observed_total_blocking_time = []

observed_first_visual_change = []

observed_last_visual_change = []

observed_tti = []

observed_max_potential_fid = []

This section includes all the observed and simulated fundamental page speed metrics, along with some non-fundamental ones, like “DOM Content Loaded,” or “First Meaningful Paint.”

The second section of PSI metrics focuses on possible byte and time savings from the unused code amount.

render_blocking_resources_ms_save = []

unused_javascript_ms_save = []

unused_javascript_byte_save = []

unused_css_rules_ms_save = []

unused_css_rules_bytes_save = []

A third section of the PSI metrics focuses on server response time and on the benefits of using responsive images, or the harm of not using them.

possible_server_response_time_saving = []

possible_responsive_image_ms_save = []

Note: The overall Performance Score comes from “performance_score.”

7. Create A For Loop For Taking The API Response For All URLs

The for loop is to take all of the URLs from the sitemap file and use the PSI API for each of them one by one. The for loop for PSI API automation has several sections.

The first section of the PSI API for loop starts with duplicate URL prevention.

In the sitemaps, you can see a URL that appears multiple times. This section prevents it.

# The [:9] slice limits the run to the first nine URLs; remove it to test them all.

for i in sitemap_urls[:9]:

    # Prevent duplicate trailing-slash ("/") URL requests from overriding the information.

    if i.endswith("/"):

        r = requests.get(f"

    else:

        r = requests.get(f"

Remember to check the “api_key” at the end of the endpoint for the PageSpeed Insights API.
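For orientation, the sketch below shows one way to assemble a request URL for the public v5 `runPagespeed` endpoint and to drop sitemap entries that differ only by a trailing slash. The helper names (`unique_urls`, `build_request_url`) and the choice of the `strategy` parameter with the value `mobile` are assumptions for this example, not a reconstruction of the request used in the loop above.

```python
from urllib.parse import urlencode

# Public v5 endpoint of the PageSpeed Insights API.
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def unique_urls(urls):
    """Drop URLs that differ only by a trailing slash, keeping the first seen."""
    seen = set()
    result = []
    for page_url in urls:
        key = page_url.rstrip("/")
        if key not in seen:
            seen.add(key)
            result.append(page_url)
    return result


def build_request_url(page_url, api_key, strategy="mobile"):
    """Return the full request URL with safely encoded query parameters."""
    params = {"url": page_url, "key": api_key, "strategy": strategy}
    return f"{PSI_ENDPOINT}?{urlencode(params)}"


print(unique_urls(["https://a.com/x", "https://a.com/x/", "https://a.com/y"]))
# ['https://a.com/x', 'https://a.com/y']
```

Encoding the page URL with `urlencode` avoids problems when a tested URL itself contains `?` or `&` characters. The resulting string can be passed to `requests.get` as in the loop above.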

Check the status code. In the sitemaps, there might be non-200 status code URLs; these should be cleaned.

    if r.status_code == 200:

        #print(r.json())

        data_ = json.loads(r.text)

        url.append(i)

The next section appends the specific metrics from the “data_” dictionary to the lists that we created before.

        fcp.append(data_["loadingExperience"]["metrics"]["FIRST_CONTENTFUL_PAINT_MS"]["percentile"])

        fid.append(data_["loadingExperience"]["metrics"]["FIRST_INPUT_DELAY_MS"]["percentile"])

        lcp.append(data_["loadingExperience"]["metrics"]["LARGEST_CONTENTFUL_PAINT_MS"]["percentile"])

        cls_.append(data_["loadingExperience"]["metrics"]["CUMULATIVE_LAYOUT_SHIFT_SCORE"]["percentile"])

        performance_score.append(data_["lighthouseResult"]["categories"]["performance"]["score"] * 100)
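Pages with little real-user traffic may come back without some of these field metrics, in which case direct indexing raises a `KeyError` and stops the loop. A minimal sketch of a tolerant lookup is below; the `dig` helper and the trimmed `sample` response are made up for illustration.

```python
def dig(data, *path, default=None):
    """Follow keys and list indexes through nested JSON data.

    Returns default as soon as any step of the path is missing.
    """
    current = data
    for step in path:
        try:
            current = current[step]
        except (KeyError, IndexError, TypeError):
            return default
    return current


# A trimmed, made-up response used only to show the behavior.
sample = {
    "loadingExperience": {
        "metrics": {"LARGEST_CONTENTFUL_PAINT_MS": {"percentile": 2100}}
    }
}

print(dig(sample, "loadingExperience", "metrics", "LARGEST_CONTENTFUL_PAINT_MS", "percentile"))
# 2100
print(dig(sample, "loadingExperience", "metrics", "FIRST_INPUT_DELAY_MS", "percentile"))
# None
```

Appending `None` for a missing value keeps every list the same length, which matters later when the lists become data frame columns.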

The next section focuses on “total task” count and DOM Size.

        total_tasks.append(data_["lighthouseResult"]["audits"]["diagnostics"]["details"]["items"][0]["numTasks"])

        total_tasks_time.append(data_["lighthouseResult"]["audits"]["diagnostics"]["details"]["items"][0]["totalTaskTime"])

        long_tasks.append(data_["lighthouseResult"]["audits"]["diagnostics"]["details"]["items"][0]["numTasksOver50ms"])

        dom_size.append(data_["lighthouseResult"]["audits"]["dom-size"]["details"]["items"][0]["value"])

The next section takes the “DOM Depth” and “Deepest DOM Element.”

        maximum_dom_depth.append(data_["lighthouseResult"]["audits"]["dom-size"]["details"]["items"][1]["value"])

        maximum_child_element.append(data_["lighthouseResult"]["audits"]["dom-size"]["details"]["items"][2]["value"])

The next section takes the specific observed test results from our Page Speed Insights API call.

        observed_dom_content_loaded.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["observedDomContentLoaded"])

        # Note: this reads the same "observedDomContentLoaded" key as the line above; the lab data has no direct FID field (see "maxPotentialFID" below).

        observed_fid.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["observedDomContentLoaded"])

        observed_lcp.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["largestContentfulPaint"])

        observed_fcp.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["firstContentfulPaint"])

        observed_cls.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["totalCumulativeLayoutShift"])

        observed_speed_index.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["observedSpeedIndex"])

        observed_total_blocking_time.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["totalBlockingTime"])

        observed_fp.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["observedFirstPaint"])

        observed_fmp.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["firstMeaningfulPaint"])

        observed_first_visual_change.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["observedFirstVisualChange"])

        observed_last_visual_change.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["observedLastVisualChange"])

        observed_tti.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["interactive"])

        observed_max_potential_fid.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["maxPotentialFID"])

The next section takes the potential savings from unused code, in bytes and milliseconds, along with the render-blocking resources.

        render_blocking_resources_ms_save.append(data_["lighthouseResult"]["audits"]["render-blocking-resources"]["details"]["overallSavingsMs"])

        unused_javascript_ms_save.append(data_["lighthouseResult"]["audits"]["unused-javascript"]["details"]["overallSavingsMs"])

        unused_javascript_byte_save.append(data_["lighthouseResult"]["audits"]["unused-javascript"]["details"]["overallSavingsBytes"])

        unused_css_rules_ms_save.append(data_["lighthouseResult"]["audits"]["unused-css-rules"]["details"]["overallSavingsMs"])

        unused_css_rules_bytes_save.append(data_["lighthouseResult"]["audits"]["unused-css-rules"]["details"]["overallSavingsBytes"])

The next section is to provide responsive image benefits and server response timing.

        possible_server_response_time_saving.append(data_["lighthouseResult"]["audits"]["server-response-time"]["details"]["overallSavingsMs"])

        possible_responsive_image_ms_save.append(data_["lighthouseResult"]["audits"]["uses-responsive-images"]["details"]["overallSavingsMs"])

The next section is to make the function continue to work in case a URL does not return a 200 status code.

    else:

        continue
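Once the loop has finished, the lists can be turned into a data frame and written to an XLSX file, which covers the last two steps of the outline. The sketch below is one possible way to do it; `check_equal_lengths` and `export_metrics` are hypothetical helper names, and writing XLSX with pandas assumes an Excel writer such as openpyxl is installed.

```python
def check_equal_lengths(columns):
    """Raise ValueError if the metric lists are not all the same length."""
    lengths = {name: len(values) for name, values in columns.items()}
    if len(set(lengths.values())) > 1:
        raise ValueError(f"Column lengths differ: {lengths}")


def export_metrics(columns, file_name):
    """Build a data frame from the metric lists and write it to XLSX."""
    # Imported here so the length check above stays usable without pandas.
    import pandas as pd

    check_equal_lengths(columns)
    df = pd.DataFrame(columns)
    # to_excel needs an Excel writer such as openpyxl to be installed.
    df.to_excel(file_name, index=False)
    return df
```

A call could look like `export_metrics({"url": url, "lcp": lcp, "performance_score": performance_score}, f"{domain}_{date}.xlsx")`, extended with the remaining lists. The length check is there because parallel lists drift out of sync if one append fails partway through an iteration.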

Example Usage Of Page Speed Insights API With Python For Bulk Testing

To use the specific code blocks, put them into a Python function.
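As a rough idea of what such a function could look like, the sketch below wraps the loop in a function that collects one row per URL. It is a simplified illustration, not the full script: `run_bulk_test` and its `fetch` parameter are hypothetical, only two of the metrics are extracted, and `fetch` stands for any callable that returns the parsed JSON response as a dictionary (or `None` when the request failed).

```python
def run_bulk_test(urls, fetch):
    """Call fetch(url) for each URL and collect one row of metrics per page."""
    rows = []
    for page_url in urls:
        data = fetch(page_url)
        if data is None:
            continue  # skip URLs without a usable response
        lighthouse = data["lighthouseResult"]
        rows.append({
            "url": page_url,
            "performance_score": lighthouse["categories"]["performance"]["score"] * 100,
            "dom_size": lighthouse["audits"]["dom-size"]["details"]["items"][0]["value"],
        })
    return rows
```

Passing the fetching step in as an argument keeps the function testable without network access; in the real script, `fetch` would wrap the `requests.get` call and the status code check. A list of row dictionaries like this can be handed directly to `pd.DataFrame`.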

Run the script, and you will get 29 page speed-related metrics in the columns below.

Screenshot from author, June 2022

Conclusion

The PageSpeed Insights API provides different types of page loading performance metrics.

It demonstrates how Google engineers perceive the concept of page loading performance, and how they possibly use these metrics from a ranking, UX, and quality-understanding point of view.

Using Python for bulk page speed tests gives you a snapshot of the entire website to help analyze the possible user experience, crawl efficiency, conversion rate, and ranking improvements.


Featured Image: Dundanim/Shutterstock
