Crawling enterprise websites has all of the complexities of any regular crawl plus a number of further components that must be thought-about earlier than starting the crawl.

The next approaches present find out how to accomplish a large-scale crawl and obtain the given goals, whether or not it’s a part of an ongoing checkup or a web site audit.

Page Contents

1. Make The Web site Prepared For Crawling

An necessary factor to contemplate earlier than crawling is the web site itself.

It’s useful to repair points that will decelerate a crawl earlier than beginning the crawl.

Which will sound counterintuitive to repair one thing earlier than fixing it however in terms of actually massive websites, a small downside multiplied by 5 million turns into a big downside.

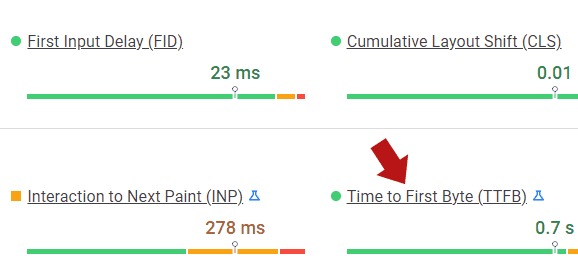

Adam Humphreys, the founding father of Making 8 Inc. digital advertising and marketing company, shared a intelligent resolution he makes use of for figuring out what’s inflicting a sluggish TTFB (time to first byte), a metric that measures how responsive an online server is.

A byte is a unit of information. So the TTFB is the measurement of how lengthy it takes for a single byte of information to be delivered to the browser.

TTFB measures the period of time between a server receiving a request for a file to the time that the primary byte is delivered to the browser, thus offering a measurement of how briskly the server is.

A technique to measure TTFB is to enter a URL in Google’s PageSpeed Insights device, which is powered by Google’s Lighthouse measurement expertise.

Screenshot from PageSpeed Insights Software, July 2022

Screenshot from PageSpeed Insights Software, July 2022Adam shared: “So a variety of instances, Core Net Vitals will flag a sluggish TTFB for pages which are being audited. To get a really correct TTFB studying one can evaluate the uncooked textual content file, only a easy textual content file with no html, loading up on the server to the precise web site.

Throw some Lorem ipsum or one thing on a textual content file and add it then measure the TTFB. The thought is to see server response instances in TTFB after which isolate what assets on the positioning are inflicting the latency.

Most of the time it’s extreme plugins that folks love. I refresh each Lighthouse in incognito and net.dev/measure to common out measurements. After I see 30–50 plugins or tons of JavaScript within the supply code, it’s virtually a right away downside earlier than even beginning any crawling.”

When Adam says he’s refreshing the Lighthouse scores, what he means is that he’s testing the URL a number of instances as a result of each take a look at yields a barely completely different rating (which is because of the truth that the pace at which information is routed by way of the Web is continually altering, identical to how the pace of visitors is continually altering).

So what Adam does is accumulate a number of TTFB scores and common them to give you a closing rating that then tells him how responsive an online server is.

If the server isn’t responsive, the PageSpeed Insights device can present an concept of why the server isn’t responsive and what must be fastened.

2. Guarantee Full Entry To Server: Whitelist Crawler IP

Firewalls and CDNs (Content material Supply Networks) can block or decelerate an IP from crawling a web site.

So it’s necessary to establish all safety plugins, server-level intrusion prevention software program, and CDNs that will impede a web site crawl.

Typical WordPress plugins so as to add an IP to the whitelist are Sucuri Web Application Firewall (WAF) and Wordfence.

3. Crawl Throughout Off-Peak Hours

Crawling a web site ought to ideally be unintrusive.

Underneath the best-case situation, a server ought to have the ability to deal with being aggressively crawled whereas additionally serving net pages to precise web site guests.

However then again, it could possibly be helpful to check how properly the server responds underneath load.

That is the place real-time analytics or server log entry shall be helpful as a result of you may instantly see how the server crawl could also be affecting web site guests, though the tempo of crawling and 503 server responses are additionally a clue that the server is underneath pressure.

If it’s certainly the case that the server is straining to maintain up then make notice of that response and crawl the positioning throughout off-peak hours.

A CDN ought to in any case mitigate the consequences of an aggressive crawl.

4. Are There Server Errors?

The Google Search Console Crawl Stats report must be the primary place to analysis if the server is having hassle serving pages to Googlebot.

Any points within the Crawl Stats report ought to have the trigger recognized and glued earlier than crawling an enterprise-level web site.

Server error logs are a gold mine of information that may reveal a variety of errors that will have an effect on how properly a web site is crawled. Of explicit significance is having the ability to debug in any other case invisible PHP errors.

5. Server Reminiscence

Maybe one thing that’s not routinely thought-about for search engine optimization is the quantity of RAM (random entry reminiscence) {that a} server has.

RAM is like short-term reminiscence, a spot the place a server shops data that it’s utilizing to be able to serve net pages to web site guests.

A server with inadequate RAM will change into sluggish.

So if a server turns into sluggish throughout a crawl or doesn’t appear to have the ability to address a crawling then this could possibly be an search engine optimization downside that impacts how properly Google is ready to crawl and index net pages.

Check out how a lot RAM the server has.

A VPS (digital non-public server) may have a minimal of 1GB of RAM.

Nevertheless, 2GB to 4GB of RAM could also be beneficial if the web site is an internet retailer with excessive visitors.

Extra RAM is mostly higher.

If the server has a ample quantity of RAM however the server slows down then the issue may be one thing else, just like the software program (or a plugin) that’s inefficient and inflicting extreme reminiscence necessities.

6. Periodically Confirm The Crawl Knowledge

Hold a watch out for crawl anomalies as the web site is crawled.

Generally the crawler could report that the server was unable to reply to a request for an online web page, producing one thing like a 503 Service Unavailable server response message.

So it’s helpful to pause the crawl and take a look at what’s occurring that may want fixing to be able to proceed with a crawl that gives extra helpful data.

Generally it’s not attending to the top of the crawl that’s the purpose.

The crawl itself is a vital information level, so don’t really feel annoyed that the crawl must be paused to be able to repair one thing as a result of the invention is an effective factor.

7. Configure Your Crawler For Scale

Out of the field, a crawler like Screaming Frog could also be arrange for pace which might be nice for almost all of customers. But it surely’ll must be adjusted to ensure that it to crawl a big web site with thousands and thousands of pages.

Screaming Frog makes use of RAM for its crawl which is nice for a standard web site however turns into much less nice for an enterprise-sized web site.

Overcoming this shortcoming is straightforward by adjusting the Storage Setting in Screaming Frog.

That is the menu path for adjusting the storage settings:

Configuration > System > Storage > Database Storage

If potential, it’s extremely beneficial (however not completely required) to make use of an inner SSD (solid-state drive) arduous drive.

Most computer systems use a regular arduous drive with shifting components inside.

An SSD is probably the most superior type of arduous drive that may switch information at speeds from 10 to 100 instances sooner than an everyday arduous drive.

Utilizing a pc with SSD outcomes will assist in reaching an amazingly quick crawl which shall be crucial for effectively downloading thousands and thousands of net pages.

To make sure an optimum crawl it’s essential to allocate 4 GB of RAM and not more than 4 GB for a crawl of as much as 2 million URLs.

For crawls of as much as 5 million URLs, it is recommended that 8 GB of RAM are allotted.

Adam Humphreys shared: “Crawling websites is extremely useful resource intensive and requires a variety of reminiscence. A devoted desktop or renting a server is a a lot sooner methodology than a laptop computer.

I as soon as spent virtually two weeks ready for a crawl to finish. I discovered from that and obtained companions to construct distant software program so I can carry out audits wherever at any time.”

8. Join To A Quick Web

If you’re crawling out of your workplace then it’s paramount to make use of the quickest Web connection potential.

Utilizing the quickest obtainable Web can imply the distinction between a crawl that takes hours to finish to a crawl that takes days.

Generally, the quickest obtainable Web is over an ethernet connection and never over a Wi-Fi connection.

In case your Web entry is over Wi-Fi, it’s nonetheless potential to get an ethernet connection by shifting a laptop computer or desktop nearer to the Wi-Fi router, which comprises ethernet connections within the rear.

This looks like a type of “it goes with out saying” items of recommendation however it’s straightforward to miss as a result of most individuals use Wi-Fi by default, with out actually fascinated with how a lot sooner it could be to attach the pc straight to the router with an ethernet wire.

9. Cloud Crawling

An alternative choice, notably for terribly giant and complicated web site crawls of over 5 million net pages, crawling from a server may be the best choice.

All regular constraints from a desktop crawl are off when utilizing a cloud server.

Ash Nallawalla, an Enterprise search engine optimization specialist and writer, has over 20 years of expertise working with a number of the world’s largest enterprise expertise corporations.

So I requested him about crawling thousands and thousands of pages.

He responded that he recommends crawling from the cloud for websites with over 5 million URLs.

Ash shared: “Crawling large web sites is finest achieved within the cloud. I do as much as 5 million URIs with Screaming Frog on my laptop computer in database storage mode, however our websites have much more pages, so we run digital machines within the cloud to crawl them.

Our content material is widespread with scrapers for aggressive information intelligence causes, extra so than copying the articles for his or her textual content material.

We use firewall expertise to cease anybody from accumulating too many pages at excessive pace. It’s adequate to detect scrapers appearing in so-called “human emulation mode.” Subsequently, we will solely crawl from whitelisted IP addresses and an additional layer of authentication.”

Adam Humphreys agreed with the recommendation to crawl from the cloud.

He stated: “Crawling websites is extremely useful resource intensive and requires a variety of reminiscence. A devoted desktop or renting a server is a a lot sooner methodology than a laptop computer. I as soon as spent virtually two weeks ready for a crawl to finish.

I discovered from that and obtained companions to construct distant software program so I can carry out audits wherever at any time from the cloud.”

10. Partial Crawls

A method for crawling giant web sites is to divide the positioning into components and crawl every half in keeping with sequence in order that the result’s a sectional view of the web site.

One other technique to do a partial crawl is to divide the positioning into components and crawl on a continuous foundation in order that the snapshot of every part isn’t solely saved updated however any modifications made to the positioning may be immediately seen.

So slightly than doing a rolling replace crawl of your complete web site, do a partial crawl of your complete web site based mostly on time.

That is an strategy that Ash strongly recommends.

Ash defined: “I’ve a crawl occurring on a regular basis. I’m working one proper now on one product model. It’s configured to cease crawling on the default restrict of 5 million URLs.”

After I requested him the explanation for a continuous crawl he stated it was due to points past his management which may occur with companies of this dimension the place many stakeholders are concerned.

Ash stated: “For my scenario, I’ve an ongoing crawl to handle recognized points in a selected space.”

11. Total Snapshot: Restricted Crawls

A technique to get a high-level view of what a web site appears like is to restrict the crawl to only a pattern of the positioning.

That is additionally helpful for aggressive intelligence crawls.

For instance, on a Your Cash Or Your Life challenge I labored on I crawled about 50,000 pages from a competitor’s web site to see what varieties of websites they had been linking out to.

I used that information to persuade the consumer that their outbound linking patterns had been poor and confirmed them the high-quality websites their top-ranked rivals had been linking to.

So generally, a restricted crawl can yield sufficient of a sure form of information to get an total concept of the well being of the general web site.

12. Crawl For Web site Construction Overview

Generally one solely wants to grasp the positioning construction.

With a view to do that sooner one can set the crawler to not crawl exterior hyperlinks and inner photographs.

There are different crawler settings that may be un-ticked to be able to produce a sooner crawl in order that the one factor the crawler is specializing in is downloading the URL and the hyperlink construction.

13. How To Deal with Duplicate Pages And Canonicals

Except there’s a purpose for indexing duplicate pages, it may be helpful to set the crawler to disregard URL parameters and different URLs which are duplicates of a canonical URL.

It’s potential to set a crawler to solely crawl canonical pages. But when somebody set paginated pages to canonicalize to the primary web page within the sequence you then’ll by no means uncover this error.

For the same purpose, not less than on the preliminary crawl, one may need to disobey noindex tags to be able to establish situations of the noindex directive on pages that must be listed.

14. See What Google Sees

As you’ve little question seen, there are a lot of other ways to crawl a web site consisting of thousands and thousands of net pages.

A crawl funds is how a lot assets Google devotes to crawling a web site for indexing.

The extra webpages are efficiently listed the extra pages have the chance to rank.

Small websites don’t actually have to fret about Google’s crawl funds.

However maximizing Google’s crawl funds is a precedence for enterprise web sites.

Within the earlier situation illustrated above, I suggested towards respecting noindex tags.

Effectively for this type of crawl you’ll really need to obey noindex directives as a result of the purpose for this type of crawl is to get a snapshot of the web site that tells you ways Google sees your complete web site itself.

Google Search Console gives a number of data however crawling a web site your self with a consumer agent disguised as Google could yield helpful data that may assist enhance getting extra of the correct pages listed whereas discovering which pages Google may be losing the crawl funds on.

For that form of crawl, it’s necessary to set the crawler consumer agent to Googlebot, set the crawler to obey robots.txt, and set the crawler to obey the noindex directive.

That method, if the positioning is about to not present sure web page components to Googlebot you’ll have the ability to see a map of the positioning as Google sees it.

It is a nice technique to diagnose potential points equivalent to discovering pages that must be crawled however are getting missed.

For different websites, Google may be discovering its technique to pages which are helpful to customers however may be perceived as low high quality by Google, like pages with sign-up varieties.

Crawling with the Google consumer agent is helpful to grasp how Google sees the positioning and assist to maximise the crawl funds.

Beating The Studying Curve

One can crawl enterprise web sites and discover ways to crawl them the arduous method. These fourteen ideas ought to hopefully shave a while off the training curve and make you extra ready to tackle these enterprise-level purchasers with gigantic web sites.

Extra assets:

Featured Picture: SvetaZi/Shutterstock

Leave a Comment